Pitfalls of Assessments

- Zherin Literte

- Oct 3, 2020

After years of working with assessments, this continues to be my favorite topic when I do teacher training or consultation. It makes me smile to see the puzzled faces when I start by asking this question: "What are you really assessing?" It is a very straightforward yet provocative question that makes teachers think things through.

After so many teachers have gone through the UBD framework for designing lessons, I was hoping not to see any more planning that starts with making up activities and performance tasks and only afterwards creates a separate rubric for the summative. Sadly, some teachers still design their tasks this way. The result is a dead end for students: they never really get adequate opportunities to develop or produce the expected output, because the final output is far from what they have done over the past few weeks or months. Beyond this, there are more pitfalls that students experience.

1. Alignment of the Summative Task to the Formative Tasks

Ever wonder why some students still produce low-quality output despite the number of activities or the time given to them? Most of the time, if you ask students, they do not know what is being asked of them or what the expected output is. They just go through the weeks complying, submitting whatever is asked of them. Towards the end, students start to cram and panic because their teacher/s start presenting the final performance task, a task that was supposed to be at least "familiar" because they have been working toward it for several weeks. Unfortunately, the familiar feeling is not there, because what they see is a totally new activity or task that they do not even know how to start. Sound familiar?

2. Vague Criteria Indicators

This is my main "catch" for teachers who undergo initial training. I get various reactions that excite me even more. It's like being in an investigative interview where you keep asking the same or similar questions to check for consistency.

One of the most common examples in the field of teaching music is assessing "being able to sing in tune" vs. "being able to sing in perfect pitch." When we ask students to sing, are we really listening for their ability to carry a tune regardless of the key and/or range, or do we really want them to sing the perfect pitch for each note in standard tuning? There is a difference, if you understand what I mean.

I have also worked with teachers who were assessing instrumental playing, yet their feedback was geared towards how the student was singing while playing the instrument. Once more, the line must be clear. Are you assessing his ability to play the instrument, or his ability to sing well and coordinate his singing with the instrument? There are lots of layers here, but we do need to be specific.

3. Lack of OBJECTIVITY through weak use of descriptive modifiers

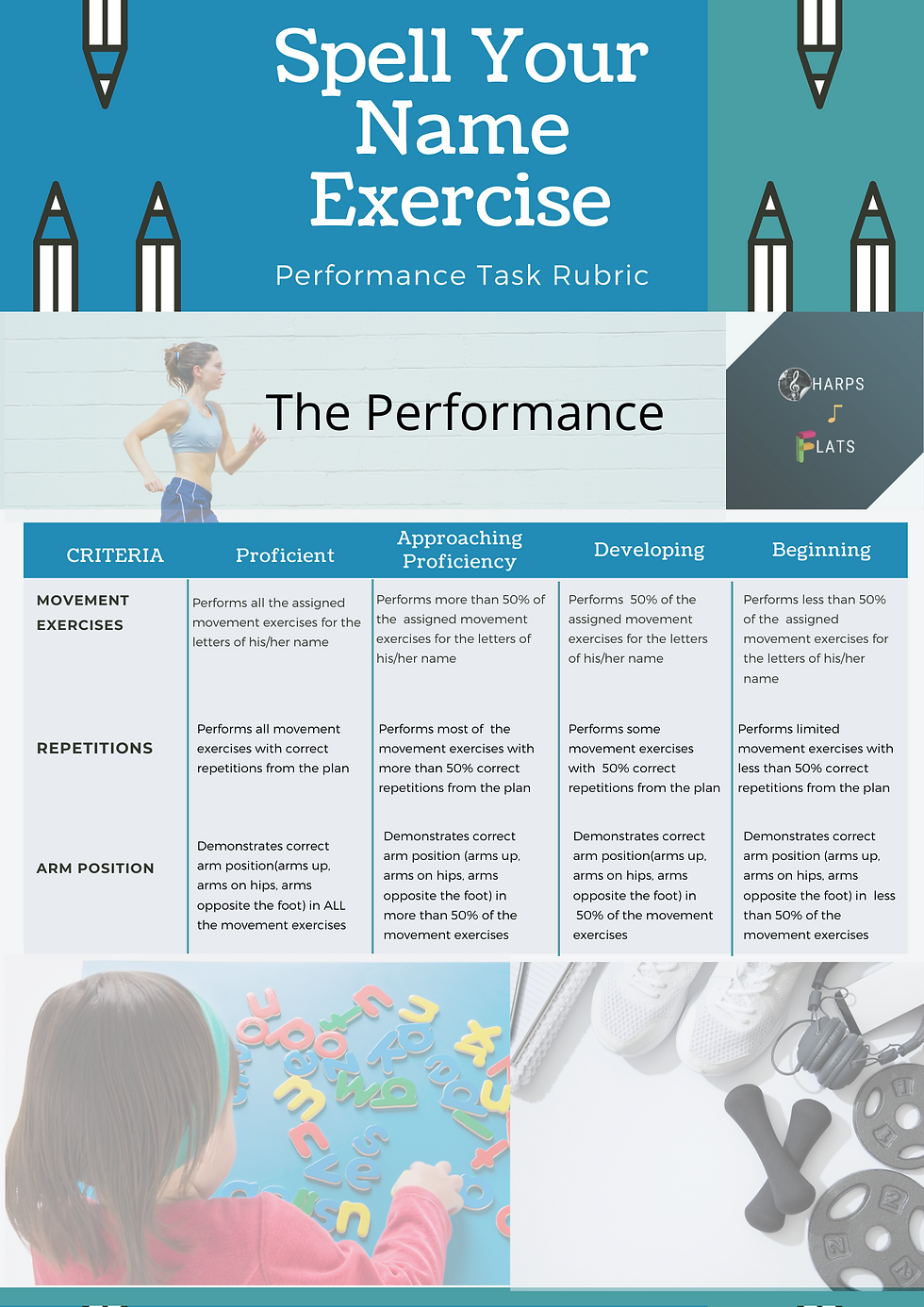

Quantifiers like "most", "some", "sometimes", etc. mean different things to different people. It is better to be more specific and measurable, so students will have a higher chance of achieving better results. Below is an example of how a good rubric can serve this purpose. When it is used explicitly throughout the formative process and applied during the summative phase, both the students and the teachers will meet their end goal.

The title of the activity is "Spell your Name Exercise"

Sample 1

This example shows the kind of rubric criteria most commonly used by teachers, in which the details overlap with what the teacher really wants to assess. Usually, the argument is between quantity and quality.

Another argument in this example is frequency vs. accuracy.

When we give these vague and overlapping indicators, it becomes harder for students to meet the success criteria, while on the teacher's end, checking will always pose a dilemma because the rubric itself is vague.

Sample 2

This is the revised version of the rubric above. Notice that the specific details are explicitly articulated to the students so the students will have a clear idea of what we are looking for.

Also here, the quantifiers are specific enough and consistent enough to show which one has more value than the other, and how to strike a balance between the two indicators.

4. The MISSING LINK

Other than alignment, one of the biggest factors in designing assessments is not only the design itself but the process. That process does not end with presenting the assessments to the students; it means being with them "in the process" of learning through the feedback that we give them. Students should be able to perceive for themselves how they are making progress.

This culture of open communication and trust starts from the beginning. Without feedback, assessment is far from authentic and valid.

As teachers, we have to remember that we are not judges but guides who walk with our students throughout the learning journey.
